This code performs several natural language processing (NLP) tasks. It begins by importing the libraries it needs. The csv module is imported but never used, so that import is unnecessary.

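The nltk (Natural Language Toolkit) library provides tools for processing and analyzing human language. For the per-sentence analysis, the code uses two of its functions: word_tokenize, which splits a sentence into individual words and punctuation marks, or “tokens,” and pos_tag, which labels each token with its part of speech (e.g., noun, verb, adjective). pos_tag returns a list of (token, tag) pairs using Penn Treebank tags such as NN (noun), VB (verb), and JJ (adjective). Both functions rely on pretrained models that the script fetches with nltk.download.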
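To make the idea of tokenization concrete, here is a deliberately simplified, dependency-free tokenizer. It is an assumption-laden sketch, not how word_tokenize works: it treats every run of word characters as a token and every other non-space character as its own token. word_tokenize handles abbreviations, contractions, and quotes more carefully, so its output can differ (for example on "U.S."). The names `TOKEN_RE` and `simple_tokenize` are hypothetical.

```python
import re

# Simplified rule: a run of word characters is one token, and any single
# non-space, non-word character (punctuation) is its own token.
TOKEN_RE = re.compile(r"\w+|[^\w\s]")


def simple_tokenize(sentence: str) -> list[str]:
    """Split a sentence into word and punctuation tokens (illustrative only)."""
    return TOKEN_RE.findall(sentence)


print(simple_tokenize("Inflation remains far too high."))
# ['Inflation', 'remains', 'far', 'too', 'high', '.']
```

This naive rule splits "U.S." into four tokens, which is exactly the kind of case a trained tokenizer such as word_tokenize is designed to handle.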

The textblob library is used to estimate the sentiment (positive or negative tone) of a sentence. The code constructs a TextBlob object from the sentence and reads its sentiment attribute, a named tuple with two fields: polarity, a float from -1.0 (negative) to 1.0 (positive), and subjectivity, a float from 0.0 (objective) to 1.0 (subjective).
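TextBlob's default analyzer scores text with a word lexicon, plus extra handling (for modifiers and similar cases) that is omitted here. The toy sketch below only illustrates the general shape of a lexicon-based score: it averages per-word (polarity, subjectivity) values and returns a named tuple with the same two fields. The lexicon and its numbers are made up for illustration and are not TextBlob's values; `LEXICON` and `toy_sentiment` are hypothetical names.

```python
from typing import NamedTuple


class Sentiment(NamedTuple):
    polarity: float      # -1.0 (negative) .. 1.0 (positive)
    subjectivity: float  # 0.0 (objective) .. 1.0 (subjective)


# Hypothetical lexicon: word -> (polarity, subjectivity).
# The scores are invented for this example, NOT taken from TextBlob.
LEXICON = {
    "good": (0.7, 0.6),
    "great": (0.8, 0.75),
    "bad": (-0.7, 0.67),
    "poor": (-0.4, 0.6),
}


def toy_sentiment(sentence: str) -> Sentiment:
    """Average the scores of lexicon words in the sentence; neutral if none match."""
    words = [w.strip(".,!?;:").lower() for w in sentence.split()]
    scores = [LEXICON[w] for w in words if w in LEXICON]
    if not scores:
        return Sentiment(0.0, 0.0)
    polarity = sum(p for p, _ in scores) / len(scores)
    subjectivity = sum(s for _, s in scores) / len(scores)
    return Sentiment(polarity, subjectivity)


print(toy_sentiment("This is good."))  # Sentiment(polarity=0.7, subjectivity=0.6)
```

Because the scores are averaged, a sentence mixing positive and negative words is pulled toward a polarity of zero.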

The code then defines a function called analyze_sentence that takes a sentence as an argument and performs three tasks: tokenizing the sentence, labeling the part of speech of each token, and determining the sentiment of the sentence. It then prints the tokens, part-of-speech tags, and sentiment.

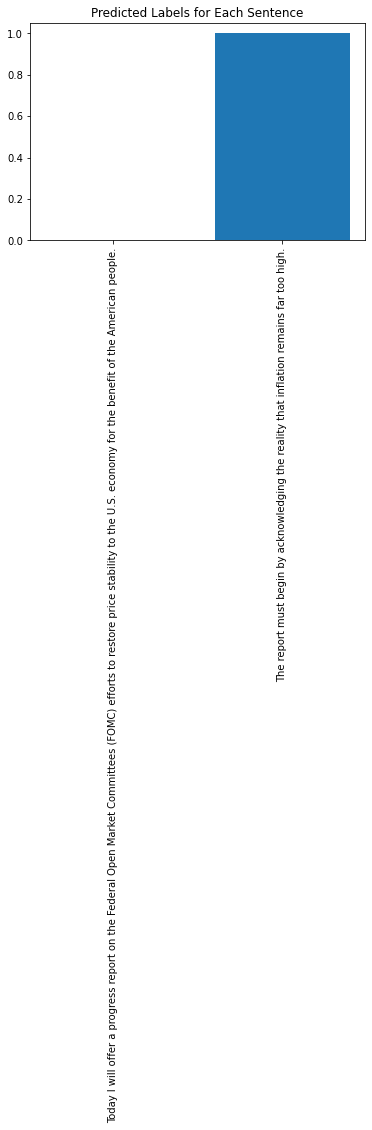

Next, the code defines a sample Federal Reserve statement and uses nltk's sent_tokenize function to split it into individual sentences. It then builds a list of labels, one per sentence, from TextBlob's polarity score: a sentence with polarity above 0 gets label 1 (positive), and every other sentence, whether neutral or negative, gets label 0.
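The labeling rule is a strict threshold at zero, which means neutral sentences are grouped with negative ones. A minimal sketch of that rule as a standalone function, assuming a polarity in the range TextBlob reports; `polarity_to_label` is a hypothetical name:

```python
def polarity_to_label(polarity: float) -> int:
    """Map a polarity in [-1, 1] to the script's binary label."""
    # Strictly positive -> 1; zero (neutral) and negative both -> 0.
    return 1 if polarity > 0 else 0


print([polarity_to_label(p) for p in (0.35, 0.0, -0.2)])  # [1, 0, 0]
```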

The code then uses the CountVectorizer class from sklearn.feature_extraction.text to build a document-term matrix: one row per sentence, one column per vocabulary word, and each entry the number of times that word occurs in the sentence. This bag-of-words representation is a common way to turn text into numerical features for machine learning models; fit_transform returns it as a SciPy sparse matrix.
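The following dependency-free sketch shows what the count matrix contains. It mirrors CountVectorizer's default behavior of lowercasing the text, keeping tokens of two or more word characters (the default `token_pattern` is `r"(?u)\b\w\w+\b"`), and ordering the vocabulary alphabetically, but it returns a dense list of lists rather than a sparse matrix and skips the library's many options. `count_matrix` is a hypothetical helper. With the real class, recent scikit-learn versions expose the column names through `vectorizer.get_feature_names_out()`.

```python
import re

# CountVectorizer's default token pattern: words of two or more characters.
TOKEN_PATTERN = re.compile(r"(?u)\b\w\w+\b")


def count_matrix(docs: list[str]) -> tuple[list[str], list[list[int]]]:
    """Return (vocabulary, dense document-term count matrix)."""
    tokenized = [TOKEN_PATTERN.findall(doc.lower()) for doc in docs]
    # One column per distinct token, sorted alphabetically.
    vocab = sorted({tok for toks in tokenized for tok in toks})
    index = {tok: i for i, tok in enumerate(vocab)}
    matrix = []
    for toks in tokenized:
        row = [0] * len(vocab)
        for tok in toks:
            row[index[tok]] += 1  # count every occurrence
        matrix.append(row)
    return vocab, matrix


vocab, X = count_matrix(["Inflation remains high", "inflation is high"])
print(vocab)  # ['high', 'inflation', 'is', 'remains']
print(X)      # [[1, 1, 0, 1], [1, 1, 1, 0]]
```

Word order is discarded: each sentence becomes only a vector of counts over the shared vocabulary.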

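The code then creates a LogisticRegression model from sklearn.linear_model, trains it on the count matrix and labels with fit, and calls predict on the same sentences. Because the training labels come from TextBlob and the predictions are made on the training data itself, the output shows how well the model reproduces TextBlob's labels rather than how it would perform on unseen text.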
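As a rough picture of what fit and predict do, here is a from-scratch logistic regression trained by batch gradient descent on the mean log-loss. This is a simplified stand-in, not scikit-learn's implementation: scikit-learn's LogisticRegression defaults to L2 regularization (C=1.0) and the lbfgs solver, whereas this sketch uses no regularization and a fixed learning rate and epoch count chosen for the example. `fit_logistic` and `predict` are hypothetical names.

```python
import math


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def fit_logistic(X, y, lr=0.5, epochs=500):
    """Fit weights and bias by batch gradient descent on the mean log-loss.

    Unlike scikit-learn's default, this applies no regularization.
    """
    n_samples, n_features = len(X), len(X[0])
    w, b = [0.0] * n_features, 0.0
    for _ in range(epochs):
        grad_w, grad_b = [0.0] * n_features, 0.0
        for xi, yi in zip(X, y):
            # Prediction error for this sample: p(y=1 | x) - y
            err = sigmoid(sum(wj * xj for wj, xj in zip(w, xi)) + b) - yi
            for j in range(n_features):
                grad_w[j] += err * xi[j]
            grad_b += err
        w = [wj - lr * gj / n_samples for wj, gj in zip(w, grad_w)]
        b -= lr * grad_b / n_samples
    return w, b


def predict(X, w, b):
    """Label 1 when the predicted probability is at least 0.5, else 0."""
    return [
        1 if sigmoid(sum(wj * xj for wj, xj in zip(w, xi)) + b) >= 0.5 else 0
        for xi in X
    ]


# Two sentences as word-count rows, with 0/1 labels as in the script
X_train = [[1, 1, 0, 1], [1, 1, 1, 0]]
y_train = [0, 1]
w, b = fit_logistic(X_train, y_train)
print(predict(X_train, w, b))  # [0, 1]
```

As in the script, predicting on the training rows mostly confirms that the model can separate the examples it has already seen.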

Finally, the code loops through each sentence and calls the analyze_sentence function on it. It also prints the predicted label for each sentence.

```python
#!/usr/bin/env python3
# -*- coding: utf-8 -*-
"""
Created on Thu Dec 22 05:38:20 2022

@author: ramnot
"""
import csv  # imported but never used

import nltk
import textblob
from sklearn.feature_extraction.text import CountVectorizer
from sklearn.linear_model import LogisticRegression

# Models used by sent_tokenize/word_tokenize and pos_tag.
# Newer NLTK releases (3.9+) may instead need 'punkt_tab' and
# 'averaged_perceptron_tagger_eng'.
nltk.download('punkt')
nltk.download('averaged_perceptron_tagger')


# Function to analyze the content and tone of a sentence
def analyze_sentence(sentence):
    # Tokenize the sentence
    tokens = nltk.word_tokenize(sentence)
    # Tag the parts of speech of each token
    pos_tags = nltk.pos_tag(tokens)
    # Use TextBlob to determine the sentiment of the sentence
    sentiment = textblob.TextBlob(sentence).sentiment
    # Print the tokens, part-of-speech tags, and sentiment of the sentence
    print("Tokens:", tokens)
    print("Part of Speech Tags:", pos_tags)
    print("Sentiment:", sentiment)


# Example Federal Reserve statement
statement = (
    "Today I will offer a progress report on the Federal Open Market "
    "Committee's (FOMC) efforts to restore price stability to the U.S. "
    "economy for the benefit of the American people. The report must begin "
    "by acknowledging the reality that inflation remains far too high."
)

# Split the statement into sentences
sentences = nltk.sent_tokenize(statement)

# Label each sentence by TextBlob polarity (1 = positive, 0 = neutral or negative)
labels = []
for sentence in sentences:
    if textblob.TextBlob(sentence).sentiment.polarity > 0:
        labels.append(1)
    else:
        labels.append(0)

# Use CountVectorizer to create a matrix of word counts for each sentence
vectorizer = CountVectorizer()
X = vectorizer.fit_transform(sentences)

# Fit a logistic regression model on the data. LogisticRegression raises a
# ValueError when all labels belong to one class, which is easy to hit with
# only two sentences, so fall back to the TextBlob labels in that case.
if len(set(labels)) < 2:
    predictions = labels
else:
    model = LogisticRegression()
    model.fit(X, labels)
    # Make predictions on the same sentences the model was trained on
    predictions = model.predict(X)

# Analyze each sentence and print the predicted label
for sentence, prediction in zip(sentences, predictions):
    print("Sentence:", sentence)
    analyze_sentence(sentence)
    print("Predicted Label:", prediction)
    print()
```