This script uses a k-nearest neighbors (KNN) classifier to assign each data point to one of three categories: rock formations, bodies of water, or other features. It loads a small example data set and splits it into a training set, which is used to fit the classifier, and a test set, which is used to evaluate the classifier’s performance.
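To make the idea behind KNN concrete, here is a minimal from-scratch sketch of the majority vote. It is not the scikit-learn implementation the script uses; the function name `knn_predict` and the two-dimensional toy points are assumptions made purely for illustration.

```python
from collections import Counter
import math


def knn_predict(train_points, train_labels, query, k):
    """Predict the label of `query` by majority vote among its k nearest training points."""
    # Pair every training label with the Euclidean distance from its point to the query
    distances = [
        (math.dist(point, query), label)
        for point, label in zip(train_points, train_labels)
    ]
    # Keep the k closest training points
    nearest = sorted(distances, key=lambda pair: pair[0])[:k]
    # The most common label among those neighbors wins
    votes = Counter(label for _, label in nearest)
    return votes.most_common(1)[0][0]


# Hypothetical toy data, not taken from the script
train_points = [[1.0, 1.0], [1.5, 2.0], [8.0, 8.0], [9.0, 7.5], [5.0, 5.0]]
train_labels = ['rock formation', 'rock formation',
                'body of water', 'body of water', 'other']

print(knn_predict(train_points, train_labels, [1.2, 1.4], k=3))
print(knn_predict(train_points, train_labels, [8.5, 8.0], k=3))
```

A query is labelled by whichever class dominates its immediate neighborhood, so the choice of `k` controls how local that neighborhood is.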

A helper function, classify_features, takes a list of data points and their corresponding predicted labels, and separates the points into three lists: rock formations, bodies of water, and other features.
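The same grouping can be written for any number of classes. The sketch below is an alternative, not the script's own function: `group_by_label` is a hypothetical name, and unlike the script it keeps every label in its own group instead of folding unknown labels into "other".

```python
from collections import defaultdict


def group_by_label(points, labels):
    """Group data points by their predicted label."""
    groups = defaultdict(list)
    for point, label in zip(points, labels):
        groups[label].append(point)
    return dict(groups)


# Hypothetical points and predictions, for illustration only
points = [[1.0, 2.0, 3.0], [4.0, 5.0, 6.0], [7.0, 8.0, 9.0]]
predicted = ['rock formation', 'body of water', 'rock formation']

groups = group_by_label(points, predicted)
for label, members in groups.items():
    print(f'{label}: {len(members)}')
```

A dictionary keyed by label avoids adding a new list and a new branch each time a category is introduced.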

The script then creates a KNN classifier with 4 nearest neighbors, fits it to the training data, and uses it to predict the labels for the test data. It then calls the classify_features function to group the test points by predicted label, and prints the number of points in each group.
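It is worth checking how 4 neighbors interact with the size of the example data. The arithmetic below is a sketch that assumes the test count is rounded up and the training set receives the remainder, and that every neighbor's vote counts equally; the training labels shown are hypothetical, since the real ones depend on the shuffle.

```python
import math
from collections import Counter

n_samples = 6    # size of the example data set
test_size = 0.2  # fraction passed to train_test_split

# Assumption: the test count is rounded up and the training set gets the rest
n_test = math.ceil(test_size * n_samples)
n_train = n_samples - n_test
print(f'test points: {n_test}, training points: {n_train}')

# Hypothetical training labels; the real ones depend on the shuffle
train_labels = ['other', 'other', 'rock formation', 'body of water']
k = 4

# When k equals the number of training points, every query is decided by
# the same vote over the whole training set (assuming equally weighted votes)
if k == n_train:
    majority_label = Counter(train_labels).most_common(1)[0][0]
    print(f'every test point would be labelled: {majority_label}')
```

On a data set this small the classifier cannot distinguish between queries, so the printed counts and accuracy are best read as a smoke test rather than a measure of quality.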

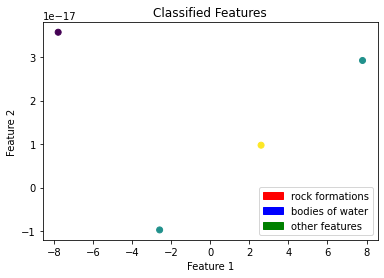

The script also evaluates the classifier’s performance by computing the accuracy of its predictions on the test data. It then encodes the training labels as integers with a LabelEncoder object and reduces the feature data to two dimensions using principal component analysis (PCA). Finally, it creates a scatter plot of the training data, with a different color for each class.
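Two of these steps are simple enough to spell out by hand. The sketch below shows what accuracy and label encoding compute, using hypothetical helper names (`accuracy`, `encode_labels`) and made-up labels; it assumes classes are numbered in sorted order, which is how scikit-learn's LabelEncoder orders them.

```python
def accuracy(y_true, y_pred):
    """Fraction of predictions that match the true labels."""
    correct = sum(t == p for t, p in zip(y_true, y_pred))
    return correct / len(y_true)


def encode_labels(labels):
    """Map each distinct label to an integer, numbering classes in sorted order."""
    classes = sorted(set(labels))
    index = {name: i for i, name in enumerate(classes)}
    return classes, [index[name] for name in labels]


# Hypothetical labels, for illustration only
y_true = ['rock formation', 'body of water', 'other', 'other']
y_pred = ['rock formation', 'other', 'other', 'other']

print(f'Accuracy: {accuracy(y_true, y_pred):.2f}')  # 3 of 4 correct

classes, encoded = encode_labels(y_true)
print(classes)
print(encoded)
```

The integer codes are what let a plotting call treat class membership as a numeric value and color the points accordingly.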

RAMNOT’s Potential Builds:

- Classifying geological features in satellite imagery to identify locations of rock formations and bodies of water.

- Identifying types of land use in aerial photographs, such as forests, agricultural fields, and urban areas.

- Classifying types of objects in images or videos, such as vehicles, pedestrians, and buildings.

import numpy as np
import matplotlib.pyplot as plt
import matplotlib.patches as mpatches
from sklearn.neighbors import KNeighborsClassifier
from sklearn.decomposition import PCA
from sklearn.model_selection import train_test_split
from sklearn.preprocessing import LabelEncoder

def classify_features(data, y_pred):
    """Group data points into three lists according to their predicted labels."""
    # Initialize empty lists to store the classified features
    rock_formations = []
    bodies_of_water = []
    other_features = []

    # Iterate through the data points and predicted labels
    for point, label in zip(data, y_pred):
        if label == 'rock formation':
            # Add the point to the list of rock formations
            rock_formations.append(point)
        elif label == 'body of water':
            # Add the point to the list of bodies of water
            bodies_of_water.append(point)
        else:
            # Any other label is treated as an "other" feature
            other_features.append(point)

    # Return the lists of classified features
    return rock_formations, bodies_of_water, other_features


def load_data():
    """Return the example data as a list of feature/type records."""
    data = [
        {'features': [1.0, 2.0, 3.0], 'type': 'rock formation'},
        {'features': [4.0, 5.0, 6.0], 'type': 'body of water'},
        {'features': [7.0, 8.0, 9.0], 'type': 'other'},
        {'features': [10.0, 11.0, 12.0], 'type': 'rock formation'},
        {'features': [13.0, 14.0, 15.0], 'type': 'other'},
        {'features': [16.0, 17.0, 18.0], 'type': 'body of water'},
    ]
    return data


# Load the data
data = load_data()

# Extract the feature data and labels from the input data
X = np.array([point['features'] for point in data])
y = np.array([point['type'] for point in data])

# Split the data into a training set and a test set
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=42)

# Create a KNN classifier with 4 nearest neighbors
model = KNeighborsClassifier(n_neighbors=4)

# Fit the classifier to the training data
model.fit(X_train, y_train)

# Use the model to predict the labels for the test data
y_pred = model.predict(X_test)

# Group the test points by their predicted labels
rock_formations, bodies_of_water, other_features = classify_features(X_test, y_pred)

# Print the number of each type of feature
print(f'Number of rock formations: {len(rock_formations)}')
print(f'Number of bodies of water: {len(bodies_of_water)}')
print(f'Number of other features: {len(other_features)}')

# Evaluate the model's performance on the test data
accuracy = model.score(X_test, y_test)
print(f'Model accuracy: {accuracy:.2f}')

# Create a LabelEncoder object and fit it to the training labels
le = LabelEncoder()
le.fit(y_train)

# Encode the training labels as integers
y_train_encoded = le.transform(y_train)

# Visualize the classified features in the training data
# Reduce the dimensionality of the feature data using PCA
pca = PCA(n_components=2)
X_train_pca = pca.fit_transform(X_train)

# Give each class a fixed color so the points match the legend
class_colors = {'rock formation': 'red', 'body of water': 'blue', 'other': 'green'}
point_colors = [class_colors[le.classes_[i]] for i in y_train_encoded]

# Create a scatter plot of the PCA-reduced feature data
plt.scatter(X_train_pca[:, 0], X_train_pca[:, 1], c=point_colors)
plt.xlabel('Principal component 1')
plt.ylabel('Principal component 2')
plt.title('Classified Features')

# Add a legend to the plot
rock_formations_patch = mpatches.Patch(color='red', label='rock formations')
bodies_of_water_patch = mpatches.Patch(color='blue', label='bodies of water')
other_features_patch = mpatches.Patch(color='green', label='other features')
plt.legend(handles=[rock_formations_patch, bodies_of_water_patch, other_features_patch])

# Display the figure
plt.show()