The code imports NumPy, OpenCV, pandas, and scikit-learn’s RandomForestRegressor. It also imports cv2_imshow from google.colab.patches, which displays video frames inside a Colab notebook, where cv2.imshow() is not available.

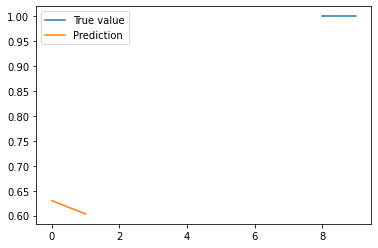

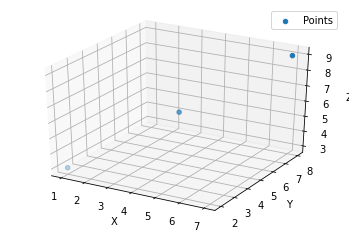

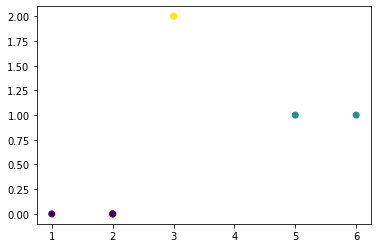

Next, the code creates a sample dataset named after transit photometry, a method for detecting exoplanets by measuring the slight dimming of a star’s light when a planet passes in front of it. The dataset consists of three hand-written data points, each containing an identifier, a list of two values, and a probability value. The two values are the pixel coordinates of the center of an AR marker in a video frame, and the probability value is the predicted probability that an exoplanet exists around the celestial object represented by that marker. These values are placeholders for demonstration; no light-curve measurements are involved.
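To make the dimming concrete, here is a small sketch using the standard approximation that the fractional drop in brightness is roughly the square of the planet-to-star radius ratio. It is not part of the original script: the function name `transit_depth` and the constants are illustrative, and limb darkening and grazing transits are ignored.

```python
# Illustrative sketch only; not part of the original script.
# Nominal radii in kilometres.
R_SUN_KM = 695_700.0
R_JUPITER_KM = 71_492.0
R_EARTH_KM = 6_378.1


def transit_depth(planet_radius_km, star_radius_km):
    """Approximate fractional drop in stellar flux during a transit.

    Uses depth ~ (R_planet / R_star) ** 2 and ignores limb darkening.
    """
    return (planet_radius_km / star_radius_km) ** 2


jupiter_depth = transit_depth(R_JUPITER_KM, R_SUN_KM)
earth_depth = transit_depth(R_EARTH_KM, R_SUN_KM)

print(f"Jupiter-size planet, Sun-like star: {jupiter_depth:.4%}")
print(f"Earth-size planet, Sun-like star:   {earth_depth:.4%}")
```

A Jupiter-size planet dims a Sun-like star by about 1%, while an Earth-size planet dims it by less than 0.01%, which is why the method needs very precise brightness measurements.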

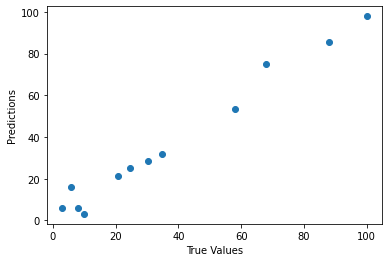

The code then trains a machine learning model, specifically a Random Forest Regressor, on the sample dataset using the pixel coordinates as the input features and the probability values as the target values.
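The training step can be sketched on its own, as below. The `random_state` argument and the query points are assumptions added here to make the run repeatable; the original script uses the default constructor. With only three training rows, the model can do little more than echo the nearest training targets.

```python
import numpy as np
from sklearn.ensemble import RandomForestRegressor

# Same three sample rows as in the article: pixel coordinates -> probability.
X = np.array([[100, 100], [200, 200], [300, 300]])
y = np.array([0.9, 0.5, 0.1])

# random_state is an addition for reproducibility, not in the original.
model = RandomForestRegressor(n_estimators=100, random_state=0)
model.fit(X, y)

# Hypothetical query points: one between training rows, one far outside them.
query = np.array([[150, 150], [1000, 1000]])
predictions = model.predict(query)
print(predictions)
```

A random forest averages training targets, so every prediction stays between the smallest and largest target it has seen (0.1 and 0.9 here), even for a point far outside the training range.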

The code then sets up a video capture using OpenCV and enters a loop to continuously capture and process video frames. In each iteration of the loop, the code first captures a video frame and converts it to grayscale. It then uses the OpenCV Aruco module to detect AR markers in the frame.
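For readers curious about what the grayscale step does, the following NumPy sketch applies the luma weights that OpenCV documents for its BGR-to-gray conversion (0.299 R + 0.587 G + 0.114 B). The helper name `bgr_to_gray` is hypothetical, and OpenCV's own integer arithmetic may differ from this floating-point version by one gray level.

```python
import numpy as np


def bgr_to_gray(frame_bgr):
    """Weighted sum of the B, G, R channels, rounded to 8-bit gray."""
    weights = np.array([0.114, 0.587, 0.299])  # order matches B, G, R
    gray = frame_bgr.astype(np.float64) @ weights
    return np.rint(gray).clip(0, 255).astype(np.uint8)


# A tiny 2x2 test frame in OpenCV's BGR channel order.
frame = np.zeros((2, 2, 3), dtype=np.uint8)
frame[0, 0] = (255, 255, 255)  # white
frame[0, 1] = (0, 0, 255)      # pure red (B=0, G=0, R=255)

gray = bgr_to_gray(frame)
print(gray)
```

The result is a single-channel image with the same height and width as the input, which is the form of input the marker detector is given in the script.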

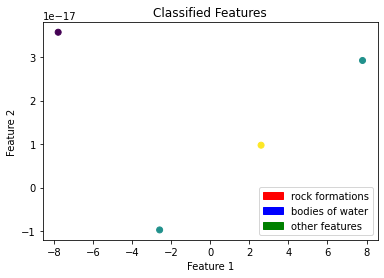

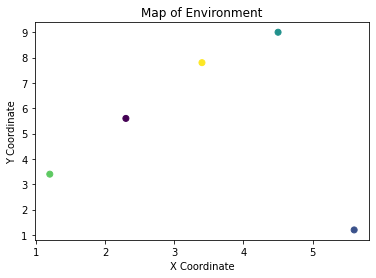

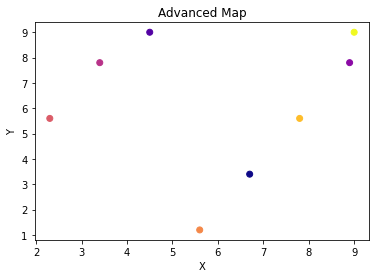

For each detected marker, the code calculates the marker’s center by taking the mean of its four corner coordinates and predicts the exoplanet probability for that position using the trained machine learning model. It then visualizes the result in real time by drawing the marker outline and center and overlaying the marker ID and exoplanet probability on the video frame.
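The center calculation is easy to get subtly wrong because of the array shape. The sketch below assumes each detected marker arrives as a float array of shape (1, 4, 2), which is how OpenCV's ArUco detector returns corners; the sample coordinates and the helper name `marker_center` are made up for illustration.

```python
import numpy as np

# One marker's corners as the ArUco detector returns them: shape (1, 4, 2).
corners = np.array(
    [[[90.0, 90.0], [110.0, 90.0], [110.0, 110.0], [90.0, 110.0]]],
    dtype=np.float32,
)


def marker_center(marker_corner):
    """Average the four (x, y) corners into a single (x, y) point."""
    return marker_corner.reshape(-1, 2).mean(axis=0)


# Averaging over axis 0 of the raw array only removes the leading
# dimension and leaves all four corners in place.
print(np.mean(corners, axis=0).shape)

center = marker_center(corners)
# OpenCV drawing functions expect plain Python ints for pixel positions.
center_px = tuple(int(round(float(v))) for v in center)
print(center_px)
```

Flattening to four rows of (x, y) before averaging gives one point per marker, which can then be passed both to the model and to the drawing calls.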

Finally, the code displays the video frame and waits for the user to press the ‘q’ key to exit the loop and close the video capture and window.

    # Import libraries
    import numpy as np
    import cv2
    import pandas as pd
    from sklearn.ensemble import RandomForestRegressor
    from google.colab.patches import cv2_imshow  # Colab replacement for cv2.imshow()

    # Create sample data: (marker ID, [x, y] marker center in pixels, probability)
    data = [
        (1, [100, 100], 0.9),
        (2, [200, 200], 0.5),
        (3, [300, 300], 0.1),
    ]
    X = [item[1] for item in data]
    y = [item[2] for item in data]

    # Train machine learning model on sample data
    model = RandomForestRegressor()
    model.fit(X, y)

    # Set up video capture (device 0 must be a camera visible to this machine)
    capture = cv2.VideoCapture(0)

    # Load the marker dictionary once. Dictionary_get() is missing from newer
    # OpenCV releases; getPredefinedDictionary() works in old and new versions.
    aruco_dict = cv2.aruco.getPredefinedDictionary(cv2.aruco.DICT_6X6_250)

    while True:
        # Capture video frame
        ret, frame = capture.read()

        # Stop if the capture is not open or no frame was returned
        if not capture.isOpened() or not ret:
            break

        # Convert frame to grayscale
        gray = cv2.cvtColor(frame, cv2.COLOR_BGR2GRAY)

        # Detect AR markers in frame (marker_ids is None when nothing is found).
        # On OpenCV 4.7+, cv2.aruco.ArucoDetector is the newer interface.
        marker_corners, marker_ids, _ = cv2.aruco.detectMarkers(gray, aruco_dict)

        if marker_ids is not None:
            # Loop over detected markers
            for marker_id, marker_corner in zip(marker_ids.flatten(), marker_corners):
                # Each marker_corner has shape (1, 4, 2); average the four corners
                points = marker_corner.reshape(-1, 2)
                center = tuple(int(v) for v in points.mean(axis=0))

                # Predict exoplanet probability for marker using machine learning model
                marker_probability = model.predict([list(center)])[0]

                # Draw marker outline (drawContours needs integer points)
                outline = points.astype(np.int32).reshape(-1, 1, 2)
                cv2.drawContours(frame, [outline], -1, (0, 255, 0), 2)

                # Draw marker center
                cv2.circle(frame, center, 5, (0, 0, 255), -1)

                # Display marker ID and exoplanet probability on one line
                # (cv2.putText does not render "\n")
                text = "ID: {} Probability: {:.2f}".format(marker_id, marker_probability)
                cv2.putText(frame, text, center, cv2.FONT_HERSHEY_SIMPLEX, 0.5, (255, 255, 255), 2)

        # Show video frame
        cv2_imshow(frame)

        # Break loop if user presses 'q' key
        if cv2.waitKey(1) & 0xFF == ord('q'):
            break

    # Release video capture and close window
    capture.release()
    cv2.destroyAllWindows()

Possible applications of this approach include:

- Augmented reality (AR) exoplanet exploration game

- AR exoplanet education and visualization tool

- Real-time exoplanet probability prediction for individual AR markers

- AR exoplanet treasure hunt game

- AR exoplanet quiz game

- AR exoplanet trivia display for museums or planetariums

- Real-time exoplanet probability prediction for celestial objects viewed through a telescope

- Exoplanet probability prediction tool for multiple celestial objects in a single frame

- Exoplanet detection and visualization tool for satellite imagery

- Exoplanet detection and visualization tool for ground-based telescopes

- Exoplanet detection and visualization tool for space-based telescopes

- Exoplanet probability prediction tool for celestial objects in a star cluster

- Exoplanet probability prediction tool for celestial objects in a galaxy

- Exoplanet probability prediction tool for celestial objects in a planetary system

- Exoplanet probability prediction tool for celestial objects in a globular cluster

- Exoplanet probability prediction tool for celestial objects in a dwarf galaxy

- Exoplanet probability prediction tool for celestial objects in a galaxy cluster

- Exoplanet probability prediction tool for celestial objects in a supercluster

- Exoplanet probability prediction tool for celestial objects in a cosmic web

- Exoplanet probability prediction tool for celestial objects in a large-scale structure of the universe